SVM Loss Function

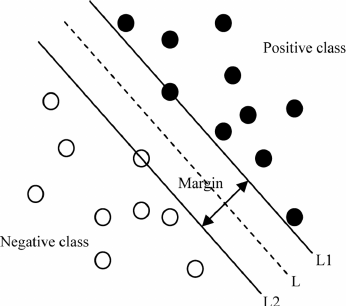

For the problem of classification, one of loss function that is commonly used is multi-class SVM (Support Vector Machine). The SVM loss is to satisfy the requirement that the correct class for one of the input is supposed to have a higher score than the incorrect classes by some fixed margin \(\delta\). It turns out that the fixed margin \(\delta\) can be any real number and it does not matter how big it is. We, therefore, let \(\delta=1\).

In CIFAR-10, we have the dataset of training images, \(x_i\) and its corresponding labels, \(y_i\). The linear score function \(f(x_i;W,b)\) computes a score vector for each training image, \(x_i\), where \(f(x_i;W,b) \in R^{10}\). Denote score vector for \(x_i\) be \(s\) where \(s=f(x_i;W,b)\). Then, each element, \(s_j\), in score vector \(s\) is the score for $j$-th class. That is, \(s_j=f(x_i;W,b)_j;j=1,.,10\)

Based on that notation declaration, multi-class SVM loss/hinge loss function is defined as following:

\[L_i=\sum_{j\ne y_i} max(0,s_j-s_{y_i}+1)\]Let’s compute the SVM/hinge loss for each input image:

| image1(class=cat) | image2(class=car) | image3(class=frog) | |

|---|---|---|---|

| cat | 3.2 | 1.3 | 2.2 |

| car | 5.1 | 4.9 | 2.5 |

| frog | -1.7 | 2.0 | -3.1 |

For image 1 where the ground truth class is cat,

\[\begin{align*} L_1&=\sum_{j\ne y_i} max(0,s_j-s_{y_1}+1)\\ &=max(0,5.1-3.2+1)+max(0,-1.7-3.2+1)\\ &=max(0,2.9)+max(0,-3.9)\\ &=2.9+0\\ &=2.9 \end{align*}\]For image 2 where the ground truth is car,

\[\begin{align*} L_2&=\sum_{j\ne y_i} max(0,s_j-s_{y_2}+1)\\ &=max(0,1.3-4.9+1)+max(0,2.0-4.9+1)\\ &=max(0,-2.6)+max(0,-1.9)\\ &=0+0\\ &=0 \end{align*}\]For image 3 where the ground truth is frog,

\[\begin{align*} L_3&=\sum_{j\ne y_i} max(0,s_j-s_{y_3}+1)\\ &=max(0,2.2+3.1+1)+max(0,2.5+3.1+1)\\ &=max(0,6.3)+max(0,6.6)\\ &=6.3+6.6\\ &=12.9 \end{align*}\]Overall, the loss for those three images are \(L=\dfrac{1}{n}\sum_{i=1}^nL_i=\dfrac{2.9+12.9+0}{3}=5.27\)

Ultimately, we want to minimize the loss by tuning our parameters, \(W,b\) . SVM wants the score of the correct class, \(y_i\), to be larger than the incorrect class scores by \(\delta\)

A last piece of terminology is the threshold at zero and \(max(0,-)\) is often called Hinge Loss. Sometimes, we may use Squared Hinge Loss instead in practice, with the form of \(max(0,-)^2\), in order to penalize the violated margins more strongly because of the squared sign. In some datasets, square hinge loss can work better. However, it is critical for us to pick a right and suitable loss function in machine learning and know why we pick it.